Mass-Storage Systems

Overview of Mass Storage Structure

Magnetic disks provide the bulk of secondary storage in modern computers:

· Drives rotate at 60 to 200 times per second

· Transfer rate is the rate at which data flows between the drive and the computer

· Positioning time (random-access time) is the time to move the disk arm to the desired cylinder (seek time) plus the time for the desired sector to rotate under the disk head (rotational latency)

· A head crash results from the disk head making contact with the disk surface

· Disks can be removable

· Disk drives are addressed as large one-dimensional arrays of logical blocks, where the logical block is the smallest unit of transfer

· The one-dimensional array of logical blocks is mapped onto the sectors of the disk sequentially:

Sector 0 is the first sector of the first track on the outermost cylinder.

Mapping proceeds in order through that track, then through the rest of the tracks in that cylinder, and then through the rest of the cylinders from outermost to innermost.
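The sequential mapping can be illustrated with a minimal sketch. It assumes an idealized geometry in which every track holds the same number of sectors and one logical block occupies one sector; real drives use zoned recording and remap bad sectors, so the constants and the function name `lba_to_chs` are hypothetical.

```python
# Hypothetical, idealized geometry: every track holds the same number of sectors.
HEADS = 4                # tracks per cylinder (one per recording surface)
SECTORS_PER_TRACK = 63   # assumed constant across all cylinders

def lba_to_chs(lba):
    """Map a logical block number to (cylinder, head, sector), all 0-based."""
    blocks_per_cylinder = HEADS * SECTORS_PER_TRACK
    cylinder, rest = divmod(lba, blocks_per_cylinder)
    head, sector = divmod(rest, SECTORS_PER_TRACK)
    return cylinder, head, sector

print(lba_to_chs(0))      # first sector, first track, outermost cylinder
print(lba_to_chs(63))     # first sector of the next track in the same cylinder
print(lba_to_chs(252))    # first sector of the next cylinder inward
```

Cylinder 0 is taken to be the outermost cylinder, matching the ordering described above.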

Disk Scheduling Algorithms

The operating system is responsible for using hardware efficiently.

For disk drives, this means achieving fast access time and high disk bandwidth.

· Access time has two major components:

Seek time is the time for the disk arm to move the heads to the cylinder containing the desired sector

Rotational latency is the additional time spent waiting for the disk to rotate the desired sector to the disk head

Scheduling aims to minimize seek time, so the algorithms below are compared by total head movement.

· Disk bandwidth is the total number of bytes transferred divided by the total time between the first request for service and the completion of the last transfer.
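The two definitions above reduce to simple arithmetic. The following sketch assumes the common approximation that average rotational latency is half a rotation; the function names and the sample numbers are illustrative, not measurements.

```python
def avg_rotational_latency_ms(rotations_per_second):
    """Average wait is assumed to be half a rotation, in milliseconds."""
    return 0.5 * 1000.0 / rotations_per_second

def disk_bandwidth(total_bytes, first_request_time_s, last_completion_time_s):
    """Bytes transferred divided by the time from first request to last completion."""
    return total_bytes / (last_completion_time_s - first_request_time_s)

# Illustrative numbers only.
seek_ms = 5.0
latency_ms = avg_rotational_latency_ms(120)   # 120 rotations per second
access_ms = seek_ms + latency_ms
bandwidth = disk_bandwidth(8 * 2**20, 0.0, 0.5)

print(f"average rotational latency: {latency_ms:.2f} ms")
print(f"access time: {access_ms:.2f} ms")
print(f"bandwidth: {bandwidth / 2**20:.1f} MiB/s")
```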

Several algorithms exist to schedule the servicing of disk I/O requests.

We illustrate them with a request queue (cylinder range 0-199):

98, 183, 37, 122, 14, 124, 65, 67

Head pointer: cylinder 53

FCFS

Illustration shows total head movement of 640 cylinders
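As a quick check of that figure, here is a minimal sketch of first-come, first-served servicing; the function name `fcfs` is an illustrative choice.

```python
def fcfs(requests, head):
    """Service requests in arrival order; return (service order, total head movement)."""
    total = 0
    for cylinder in requests:
        total += abs(cylinder - head)   # distance travelled for this request
        head = cylinder
    return list(requests), total

queue = [98, 183, 37, 122, 14, 124, 65, 67]
order, moved = fcfs(queue, head=53)
print(order)
print(moved)   # 640
```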

SSTF

Selects the request with the minimum seek time from the current head position

SSTF scheduling may cause starvation of some requests

Illustration shows total head movement of 236 cylinders
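A minimal sketch of the selection rule, using cylinder distance as a stand-in for seek time (an assumption; the function name `sstf` is illustrative):

```python
def sstf(requests, head):
    """Repeatedly service the pending request closest to the current head position."""
    pending = list(requests)
    order, total = [], 0
    while pending:
        nearest = min(pending, key=lambda c: abs(c - head))
        pending.remove(nearest)
        total += abs(nearest - head)
        head = nearest
        order.append(nearest)
    return order, total

queue = [98, 183, 37, 122, 14, 124, 65, 67]
order, moved = sstf(queue, head=53)
print(order)   # [65, 67, 37, 14, 98, 122, 124, 183]
print(moved)   # 236
```

Starvation is visible in the loop: if requests near the head kept arriving in `pending`, a distant request such as 183 would never be chosen by `min`.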

SCAN

The disk arm starts at one end of the disk, and moves toward the other end,

servicing requests until it gets to the other end of the disk,

where the head movement is reversed and servicing continues.

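The SCAN algorithm is sometimes called the elevator algorithm.

Illustration shows total head movement of 236 cylinders (head starts at 53, moves toward cylinder 0, and travels to cylinder 0 before reversing); the often-quoted 208 assumes the arm reverses at cylinder 14, the lowest request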
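The sweep can be sketched as follows. The sketch assumes the arm always travels to the physical end of the disk before reversing, as the description above states; the function name `scan` and its parameters are illustrative.

```python
def scan(requests, head, direction="down", min_cyl=0, max_cyl=199):
    """Sweep to one end of the disk servicing requests, then reverse and continue."""
    lower = sorted((c for c in requests if c < head), reverse=True)
    upper = sorted(c for c in requests if c >= head)
    if direction == "down":
        order = lower + upper
        # Travel to the first cylinder, then sweep up to the highest pending request.
        total = (head - min_cyl) + ((upper[-1] - min_cyl) if upper else 0)
    else:
        order = upper + lower
        # Travel to the last cylinder, then sweep down to the lowest pending request.
        total = (max_cyl - head) + ((max_cyl - lower[-1]) if lower else 0)
    return order, total

queue = [98, 183, 37, 122, 14, 124, 65, 67]
order, moved = scan(queue, head=53, direction="down")
print(order)
print(moved)
```

With the head at 53 moving toward cylinder 0, counting the travel all the way to cylinder 0 gives 53 + 183 = 236 cylinders. Reversing at the lowest pending request (cylinder 14) instead of at cylinder 0 would save 2 × 14 = 28 cylinders and give 208.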

C-SCAN

Provides a more uniform wait time than SCAN

· The head moves from one end of the disk to the other, servicing requests as it goes

· When it reaches the other end, however, it immediately returns to the beginning of the disk, without servicing any requests on the return trip

Treats the cylinders as a circular list that wraps around from the last cylinder to the first one
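A minimal sketch of the circular sweep, assuming the head moves toward higher cylinder numbers and that the return trip is counted as head movement (conventions differ on the latter); the function name `c_scan` is illustrative.

```python
def c_scan(requests, head, min_cyl=0, max_cyl=199):
    """Sweep upward servicing requests, return to min_cyl, then sweep upward again."""
    upper = sorted(c for c in requests if c >= head)
    lower = sorted(c for c in requests if c < head)
    order = upper + lower
    total = max_cyl - head                 # sweep to the last cylinder
    if lower:
        total += max_cyl - min_cyl         # return trip, no requests serviced
        total += lower[-1] - min_cyl       # second sweep, up to the last pending request
    return order, total

queue = [98, 183, 37, 122, 14, 124, 65, 67]
order, moved = c_scan(queue, head=53)
print(order)
print(moved)
```

Under these assumptions the example queue is serviced as 65, 67, 98, 122, 124, 183, 14, 37, with 146 + 199 + 37 = 382 cylinders of movement.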

C-LOOK

· Version of C-SCAN

· Arm only goes as far as the last request in each direction, then reverses direction immediately, without first going all the way to the end of the disk.
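A minimal sketch, assuming the head services requests while moving toward higher cylinder numbers and that the jump back to the lowest pending request counts as head movement; the function name `c_look` is illustrative.

```python
def c_look(requests, head):
    """Sweep up to the highest request, jump to the lowest request, sweep up again."""
    upper = sorted(c for c in requests if c >= head)
    lower = sorted(c for c in requests if c < head)
    order = upper + lower
    total, position = 0, head
    for cylinder in order:
        total += abs(cylinder - position)   # includes the jump from highest to lowest
        position = cylinder
    return order, total

queue = [98, 183, 37, 122, 14, 124, 65, 67]
order, moved = c_look(queue, head=53)
print(order)
print(moved)
```

For the example queue this yields 130 + 169 + 23 = 322 cylinders, because the arm never travels past cylinder 183 or below cylinder 14.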

Selecting a Disk-Scheduling Algorithm

· SSTF is common and has a natural appeal

· SCAN and C-SCAN perform better for systems that place a heavy load on the disk

Network-Attached Storage

· Network-attached storage (NAS) is storage made available over a network

rather than over a local connection (such as a bus)

· Implemented via remote procedure calls (RPCs) between host and storage

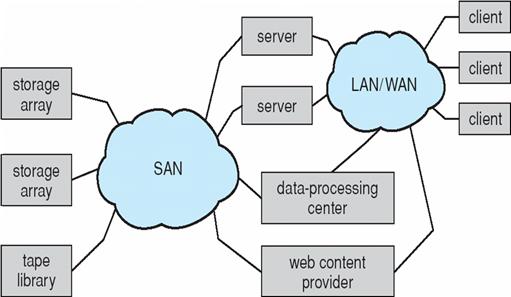

Storage Area Network

RAID (Redundant Arrays of Independent Disks) Structure

· Multiple disk drives provide reliability via redundancy

Increases the mean time to failure

· RAID improves performance and reliability of the storage system by storing redundant data

· Mirroring or shadowing keeps a duplicate of each disk

· A small number of hot-spare disks are left unallocated; a spare automatically replaces a failed disk and has the data rebuilt onto it
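Mirroring with a hot spare can be modeled with a toy sketch. Each "disk" here is just a dictionary from block number to data, and the class `MirroredVolume` with its methods is hypothetical; it does not model any real RAID controller.

```python
class MirroredVolume:
    """Toy mirrored pair with one hot spare; each disk is a dict of block -> data."""

    def __init__(self):
        self.disks = [{}, {}]
        self.spares = [{}]          # unallocated hot spare

    def write(self, block, data):
        # Every write goes to both mirrors so either one can serve reads alone.
        for disk in self.disks:
            disk[block] = data

    def read(self, block):
        return self.disks[0][block]

    def fail(self, index):
        """Simulate losing a disk: swap in the spare and rebuild it from the survivor."""
        survivor = self.disks[1 - index]
        replacement = self.spares.pop()   # raises IndexError if no spare is left
        replacement.update(survivor)      # rebuild: copy every block
        self.disks[index] = replacement

volume = MirroredVolume()
volume.write(0, b"payload")
volume.fail(0)
print(volume.read(0))
```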

Stable-Storage Implementation

To implement stable storage:

· Replicate information on more than one nonvolatile storage medium with independent failure modes

· Update information in a controlled manner to ensure that we can recover the stable data after any failure during data transfer or recovery.
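One common way to realize these two requirements is to keep two copies, write them strictly one after the other, and compare them during recovery. The sketch below assumes that scheme and uses a CRC to detect a damaged copy; the record layout and function names are hypothetical.

```python
import zlib

def make_record(data):
    return {"data": data, "crc": zlib.crc32(data)}

def is_valid(record):
    return record is not None and zlib.crc32(record["data"]) == record["crc"]

def stable_write(copies, data):
    copies[0] = make_record(data)   # the first write must complete...
    copies[1] = make_record(data)   # ...before the second one begins

def recover(copies):
    """Make both copies agree after a failure."""
    first_ok, second_ok = is_valid(copies[0]), is_valid(copies[1])
    if first_ok and not second_ok:
        copies[1] = dict(copies[0])          # repair damaged second copy
    elif second_ok and not first_ok:
        copies[0] = dict(copies[1])          # repair damaged first copy
    elif first_ok and second_ok and copies[0]["data"] != copies[1]["data"]:
        copies[1] = dict(copies[0])          # crash between the two writes
    return copies

copies = [None, None]
stable_write(copies, b"v1")
copies[0] = make_record(b"v2")               # simulate a crash after the first write
recover(copies)
print(copies[1]["data"])
```

Because the copies are never written at the same time, a single failure can damage at most one of them, which is what makes recovery possible.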

Tertiary Storage Devices

· Low cost is the defining characteristic of tertiary storage

· Generally, tertiary storage is built using removable media

· Common examples of removable media are floppy disks and CD-ROMs; other types are available

Removable Disks

· Floppy disk — thin flexible disk coated with magnetic material, enclosed in a protective plastic case

Most floppies hold about 1 MB

· Removable magnetic disks can be nearly as fast as hard disks,

but they are at a greater risk of damage from exposure

· A magneto-optic disk records data on a rigid platter coated with magnetic material

The magneto-optic head flies much farther from the disk surface than a magnetic disk head, and the magnetic material is covered with a protective layer of plastic or glass, so the disk is resistant to head crashes

· Optical disks do not use magnetism; they employ special materials that are altered by laser light

WORM Disks

· The data on read-write disks can be modified over and over

· WORM (“Write Once, Read Many Times”) disks can be written only once

· A thin aluminum film is sandwiched between two glass or plastic platters

· To write a bit, the drive uses laser light to burn a small hole through the aluminum; information can be destroyed but not altered

· Very durable and reliable

· Read-only disks, such as CD-ROM and DVD, come from the factory with the data pre-recorded

Magnetic tape

· Was an early secondary-storage medium

· Relatively permanent and holds large quantities of data

· Access time is slow

· Random access is about 1000 times slower than on disk

· Mainly used for backup, storage of infrequently used data, and as a transfer medium between systems

· Once the data is under the head, transfer rates are comparable to disk

· 20-200 GB is typical storage capacity

· Compared to a disk, a tape is less expensive and holds more data, but random access is much slower

· Large tape installations typically use robotic tape changers that move tapes between tape drives and storage slots in a tape library

Tape Drives Application Interface

· The basic operations for a tape drive differ from those of a disk drive

· locate() positions the tape to a specific logical block

· read_position() returns the logical block number where the tape head is located

· The space() operation enables relative motion

· Tape drives are “append-only” devices; updating a block in the middle of the tape also effectively erases everything beyond that block

· An EOT mark is placed after a block that is written
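These operations can be modeled with a toy in-memory tape. The class `ToyTape` is hypothetical and only mirrors the semantics listed above; it additionally assumes the drive cannot position past the EOT mark.

```python
class ToyTape:
    """In-memory model of a tape: a list of blocks with an implicit EOT mark at the end."""

    def __init__(self):
        self.blocks = []      # the EOT mark sits just past the last written block
        self.position = 0

    def locate(self, block_number):
        if not 0 <= block_number <= len(self.blocks):
            raise ValueError("cannot position beyond the EOT mark")
        self.position = block_number

    def read_position(self):
        return self.position

    def space(self, count):
        # Relative motion: positive moves forward, negative moves backward.
        self.locate(self.position + count)

    def write(self, data):
        # Append-only: writing here discards every block beyond this point.
        del self.blocks[self.position:]
        self.blocks.append(data)
        self.position += 1

    def read(self):
        if self.position >= len(self.blocks):
            raise EOFError("reached the EOT mark")
        data = self.blocks[self.position]
        self.position += 1
        return data

tape = ToyTape()
for block in (b"a", b"b", b"c"):
    tape.write(block)
tape.locate(1)
tape.write(b"X")              # overwrites block 1 and erases block 2
print(tape.blocks, tape.read_position())
```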

Hierarchical Storage Management (HSM)

· A hierarchical storage system extends the storage hierarchy beyond primary memory and secondary storage to incorporate tertiary storage — usually implemented as a jukebox of tapes or removable disks

· Small and frequently used files remain on disk

· Large, old, inactive files are archived to the jukebox

· HSM systems are found in supercomputing centers that have enormous volumes of data.
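The migration policy described above can be sketched as a simple filter. The thresholds, field names, and the function `select_for_archive` are hypothetical; a real HSM would apply site-specific rules.

```python
def select_for_archive(files, today, min_size_bytes=100 * 2**20, min_idle_days=180):
    """Return names of files that are both large and inactive (jukebox candidates)."""
    return [
        f["name"]
        for f in files
        if f["size"] >= min_size_bytes
        and today - f["last_access_day"] >= min_idle_days
    ]

# Days are plain integers here to keep the sketch self-contained.
files = [
    {"name": "notes.txt", "size": 4_096, "last_access_day": 990},
    {"name": "sim_output.dat", "size": 5 * 2**30, "last_access_day": 400},
    {"name": "old_small.cfg", "size": 2_048, "last_access_day": 100},
]
print(select_for_archive(files, today=1000))
```

Small files stay on disk even when they are old, which matches the rule that only large, inactive files are archived.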

Speed

· Two aspects of speed in tertiary storage are bandwidth and latency

Sustained bandwidth – average data rate during a large transfer

Effective bandwidth – average over the entire I/O time, including seek() or locate(), and cartridge switching

Access latency – amount of time needed to locate data

· Access time for a disk: move the arm to the selected cylinder and wait for the rotational latency; typically less than 35 milliseconds

· Access on tape requires winding the tape reels until the selected block reaches the tape head, which can take tens or hundreds of seconds

· Generally random access within a tape cartridge is about a thousand times slower than random access on disk
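The gap between sustained and effective bandwidth can be made concrete with a short calculation. The function name and all numbers below are illustrative assumptions, not measurements of any device.

```python
def effective_bandwidth(bytes_transferred, sustained_bw, access_latency_s, switch_time_s=0.0):
    """Average rate over the whole I/O: cartridge switch + locate + transfer."""
    transfer_time_s = bytes_transferred / sustained_bw
    total_time_s = switch_time_s + access_latency_s + transfer_time_s
    return bytes_transferred / total_time_s

# Illustrative tape read: 1 GB at 10 MB/s sustained, 60 s to locate, 30 s to switch cartridges.
rate = effective_bandwidth(1e9, 10e6, access_latency_s=60, switch_time_s=30)
print(f"{rate / 1e6:.2f} MB/s effective vs 10.00 MB/s sustained")
```

In this example the transfer itself takes 100 seconds but the whole operation takes 190 seconds, so the effective bandwidth is roughly half the sustained rate; for smaller transfers the latency terms dominate even more.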

Reliability

· A fixed disk drive is likely to be more reliable than a removable disk or tape drive

· An optical cartridge is likely to be more reliable than a magnetic disk or tape

· A head crash in a fixed hard disk generally destroys the data,

whereas the failure of a tape drive or optical disk drive often leaves the data cartridge unharmed

Cost

· Main memory is much more expensive than disk storage

· The cost per megabyte of hard disk storage is competitive with magnetic tape.